Can chatbots craft correct code?

I recently attended the AI Engineer Code Summit in New York, an invite-only gathering of AI leaders and engineers. One theme emerged repeatedly in conversations with attendees building with AI: the belief that we’re approaching a future where developers will never need to look at code again. When I pressed these proponents, several made a similar argument:

Forty years ago, when high-level programming languages like C became increasingly popular, some of the old guard resisted because C gave you less control than assembly. The same thing is happening now with LLMs.

On its face, this analogy seems reasonable. Both represent increasing abstraction. Both initially met resistance. Both eventually transformed how we write software. But this analogy really thrashes my cache because it misses a fundamental distinction that matters more than abstraction level: determinism.

The difference between compilers and LLMs isn’t just about control or abstraction. It’s about semantic guarantees. And as I’ll argue, that difference has profound implications for the security and correctness of software.

The compiler’s contract: Determinism and semantic preservation

Compilers have one job: preserve the programmer’s semantic intent while changing syntax. When you write code in C, the compiler transforms it into assembly, but the meaning of your code remains intact. The compiler might choose which registers to use, whether to inline a function, or how to optimize a loop, but it doesn’t change what your program does. If the semantics change unintentionally, that’s not a feature. That’s a compiler bug.

This property, semantic preservation, is the foundation of modern programming. When you write result = x + y in Python, the language guarantees that addition happens. The interpreter might optimize how it performs that addition, but it won’t change what operation occurs. If it did, we’d call that a bug in Python.

The historical progression from assembly to C to Python to Rust maintained this property throughout. Yes, we’ve increased abstraction. Yes, we’ve given up fine-grained control. But we’ve never abandoned determinism. The act of programming remains compositional: you build complex systems from simpler, well-defined pieces, and the composition itself is deterministic and unambiguous.
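As a small illustration of that determinism, the sketch below compiles the same Python source twice and checks that the bytecode is identical. It assumes a single interpreter version (bytecode differs between Python releases), and the `run` helper is a name invented for this example.

```python
# Compile the same source twice within one interpreter.
SOURCE = "result = x + y"

first = compile(SOURCE, "<example>", "exec")
second = compile(SOURCE, "<example>", "exec")

# Same input, same toolchain, same output: the translation is deterministic.
assert first.co_code == second.co_code


def run(x, y):
    """Execute the compiled snippet with the given operands and return `result`."""
    namespace = {"x": x, "y": y}
    exec(first, namespace)
    return namespace["result"]
```

Calling `run(2, 3)` performs the addition every time. Nothing about the translation step can decide that `+` should mean something else on a given run.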

There are some rare conditions where the abstraction of high-level languages prevents the preservation of the programmer’s semantic intent. For example, cryptographic code needs to run in a constant amount of time over all possible inputs; otherwise, an attacker can use the timing differences as an oracle to do things like brute-force passwords. Properties like “constant time execution” aren’t something most programming languages allow the programmer to specify. Until very recently, there was no good way to force a compiler to emit constant-time code; developers had to resort to using dangerous inline assembly. But with Trail of Bits’ new extensions to LLVM, we can now have compilers preserve this semantic property as well.
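The timing-oracle concern can be sketched at the source level in Python. This is only an illustration of the property, not the LLVM extensions mentioned above: `naive_equal` is a hypothetical early-exit comparison whose running time depends on where the first mismatch occurs, while `hmac.compare_digest` is the standard-library function designed to avoid content-dependent timing.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: running time depends on where the first mismatch is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner the earlier the mismatch, leaking information
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison via hmac.compare_digest, which avoids content-dependent timing."""
    return hmac.compare_digest(a, b)
```

Both functions return the same answers. They differ only in a property (timing) that the language gives you no direct way to state, which is exactly why it is easy for a translation step to lose.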

As I wrote back in 2017 in “Automation of Automation,” there are fundamental limits on what we can automate. But those limits don’t eliminate determinism in the tools we’ve built; they simply mean we can’t automatically prove every program correct. Compilers don’t try to prove your program correct; they just faithfully translate it.

Why LLMs are fundamentally different

LLMs are nondeterministic by design. This isn’t a bug; it’s a feature. But it has consequences we need to understand.

Nondeterminism in practice

Run the same prompt through an LLM twice, and you’ll likely get different code. Even with temperature set to zero, outputs are not guaranteed to be identical across runs, and model updates change behavior over time. The same request to “add error handling to this function” could mean catching exceptions, adding validation checks, returning error codes, or introducing logging, and the LLM might choose differently each time.
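To make the “add error handling” example concrete, here is a sketch of two plausible responses to that request. The `parse_port` function and both variants are hypothetical, invented for illustration; neither is output from a real model.

```python
from typing import Optional


def parse_port(text: str) -> int:
    """Original function: no explicit error handling."""
    return int(text)


def parse_port_strict(text: str) -> int:
    """Reading 1: validate the input and raise a descriptive exception."""
    try:
        port = int(text)
    except ValueError as exc:
        raise ValueError(f"not a valid port number: {text!r}") from exc
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return port


def parse_port_lenient(text: str) -> Optional[int]:
    """Reading 2: swallow the parse error and return a sentinel instead."""
    try:
        return int(text)
    except ValueError:
        return None
```

Both variants “add error handling,” yet callers see different contracts: one raises on `"70000"`, the other happily returns it, and one returns `None` where the other raises.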

This is fine for creative writing or brainstorming. It’s less fine when you need the semantic meaning of your code to be preserved.

The ambiguous input problem

Natural language is inherently ambiguous. When you tell an LLM to “fix the authentication bug,” you’re assuming it understands:

- Which authentication system you’re using

- What “bug” means in this context

- What “fixed” looks like

- Which security properties must be preserved

- What your threat model is

The LLM will confidently generate code based on what it thinks you mean. Whether that matches what you actually mean is probabilistic.

The unambiguous input problem (which isn’t)

“Okay,” you might say, “but what if I give the LLM unambiguous input? What if I say ‘translate this C code to Python’ and provide the exact C code?”

Here’s the thing: even that isn’t as unambiguous as it seems. Consider this C code:

```c
// C code
int increment(int n) {
    return n + 1;
}
```

I asked Claude Opus 4.5 (extended thinking), Gemini 3 Pro, and ChatGPT 5.2 to translate this code to Python, and they all produced the same result:

```python
# Python code
def increment(n: int) -> int:
    return n + 1
```

It is subtle, but the semantics have changed. In Python, signed integer arithmetic has arbitrary precision. In C, overflowing a signed integer is undefined behavior: it might wrap, might crash, might do literally anything. In Python, it’s well defined: you get a larger integer. None of the leading foundation models caught this difference. Why not? It depends on whether they were trained on examples highlighting this distinction, whether they “remember” the difference at inference time, and whether they consider it important enough to flag.

There exist an infinite number of Python programs that would behave identically to the C code for all valid inputs. An LLM is not guaranteed to produce any of them.

In fact, it’s impossible for an LLM to exactly translate the code without knowing how the original C developer expected or intended the C compiler to handle this edge case. Did the developer know that the inputs would never cause the addition to overflow? Or perhaps they inspected the assembly output and concluded that their specific compiler wraps around to the most negative value on overflow, and that behavior is required elsewhere in the code?
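Here is a sketch of three Python translations, each correct under a different assumption about the developer’s intent. The 32-bit width of `int` is itself an assumption (it is typical but not guaranteed by C), and all three function names are invented for this example.

```python
INT_MIN = -2**31      # assumes a 32-bit C int
INT_MAX = 2**31 - 1


def increment_unbounded(n: int) -> int:
    """Overflow was assumed never to happen: Python integers simply grow."""
    return n + 1


def increment_wrapping(n: int) -> int:
    """Two's-complement wraparound was relied on (e.g. code built with -fwrapv)."""
    return (n + 1 - INT_MIN) % 2**32 + INT_MIN


def increment_checked(n: int) -> int:
    """Overflow is treated as an error, making the C precondition explicit."""
    if not INT_MIN <= n < INT_MAX:
        raise OverflowError(f"{n} + 1 does not fit in a 32-bit signed int")
    return n + 1
```

All three agree on every input where the C code is well defined. They diverge only at `INT_MAX`, which is precisely the case the source code does not tell you how to handle.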

A case study: When Claude “fixed” a bug that wasn’t there

Let me share a recent experience that crystallizes this problem perfectly.

A developer suspected that a new open-source tool had stolen and open-sourced their code without a license. They decided to use Vendetect, an automated source code plagiarism detection tool I developed at Trail of Bits. Vendetect is designed for exactly this use case: you point it at two Git repos, and it finds portions of one repo that were copied from the other, including the specific offending commits.

When the developer ran Vendetect, it failed with a stack trace.

The developer, reasonably enough, turned to Claude for help. Claude analyzed the code, examined the stack trace, and quickly identified what it thought was the culprit: a complex recursive Python function at the heart of Vendetect’s Git repo analysis. Claude helpfully submitted both a GitHub issue and an extensive pull request “fixing” the bug.

I was assigned to review the PR.

First, I looked at the GitHub issue. It had been months since I’d written that recursive function, and Claude’s explanation seemed plausible! It really did look like a bug. When I checked out the code from the PR, the crash was indeed gone. No more stack trace. Problem solved, right?

Wrong.

Vendetect’s output was now empty. When I ran the unit tests, they were failing. Something was broken.

Now, I know recursion in Python is risky. Python’s stack frames are large enough that you can easily overflow the stack with deep recursion. However, I also knew that the inputs to this particular recursive function were constrained such that it would never recurse more than a few times. Claude either missed this constraint or wasn’t convinced by it. So Claude painfully rewrote the function to be iterative.

And broke the logic in the process.

I reverted to the original code on the main branch and reproduced the crash. After a few minutes of debugging, I discovered the actual problem: it wasn’t a bug in Vendetect at all.

The developer’s input repository contained two files with the same name but different casing: one started with an uppercase letter, the other with lowercase. Both the developer and I were running macOS, which uses a case-insensitive filesystem by default. When Git tries to operate on a repo with a filename collision on a case-insensitive filesystem, it throws an error. Vendetect faithfully reported this Git error, but followed it with a stack trace to show where in the code the Git error occurred.
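A check for this kind of collision can be sketched in a few lines. This is not Vendetect’s actual handling; `find_case_collisions` is a hypothetical helper, and `str.casefold` only approximates how a real case-insensitive filesystem compares names. The path list is assumed to come from something like `git ls-tree -r --name-only HEAD`.

```python
from collections import defaultdict


def find_case_collisions(paths):
    """Group paths that differ only by letter case.

    Returns a sorted list of sorted groups; each group would collide on a
    case-insensitive filesystem.
    """
    groups = defaultdict(set)
    for path in paths:
        # casefold() approximates case-insensitive filename comparison
        groups[path.casefold()].add(path)
    return sorted(sorted(group) for group in groups.values() if len(group) > 1)


collisions = find_case_collisions(["README.md", "readme.md", "src/main.py"])
```

Here `collisions` contains one group holding `README.md` and `readme.md`. The relevant point is that the check inspects the input repository, not the tool’s own code.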

I did end up modifying Vendetect to handle this edge case and print a more intelligible error message that wasn’t buried by the stack trace. But the bug that Claude had so confidently diagnosed and “fixed” wasn’t a bug at all. Claude had “fixed” working code and broken actual functionality in the process.

This experience crystallized the problem: LLMs approach code the way a human would on their first day looking at a codebase, with no context about why things are the way they are.

The recursive function looked risky to Claude because recursion in Python can be risky. Without the context that this particular recursion was bounded by the nature of Git repository structures, Claude made what seemed like a reasonable change. It even “worked” in the sense that the crash disappeared. Only thorough testing revealed that it broke the core functionality.
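To show how a recursion-to-iteration rewrite can remove a crash while silently changing behavior, here is a hypothetical sketch. It is not Vendetect’s code and not the change Claude made; the tree shape and all function names are invented for illustration.

```python
def collect_recursive(node):
    """Pre-order traversal: visit a node, then its children left to right."""
    name, children = node
    names = [name]
    for child in children:
        names.extend(collect_recursive(child))
    return names


def collect_iterative_buggy(node):
    """Plausible-looking rewrite: no recursion, no crash, but a different order."""
    names, stack = [], [node]
    while stack:
        name, children = stack.pop()
        names.append(name)
        stack.extend(children)  # children come back off the stack right to left
    return names


def collect_iterative(node):
    """Faithful rewrite: push children reversed so they pop left to right."""
    names, stack = [], [node]
    while stack:
        name, children = stack.pop()
        names.append(name)
        stack.extend(reversed(children))
    return names


TREE = ("root", [("a", [("a1", []), ("a2", [])]), ("b", [])])
```

On `TREE`, the buggy version visits `b` before `a`, while the other two agree. Any caller that depends on visit order breaks, and only a test that checks the output, not just the absence of a crash, will notice.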

And here’s the kicker: Claude was confident. The GitHub issue was detailed. The PR was extensive. There was no hedging, no uncertainty. Just like a junior developer who doesn’t know what they don’t know.

The scale problem: When context matters most

LLMs work reasonably well on greenfield projects with clear specifications. A simple web app, a standard CRUD interface, boilerplate code. These are templates the LLM has seen thousands of times. The problem is, these aren’t the situations where developers need the most help.

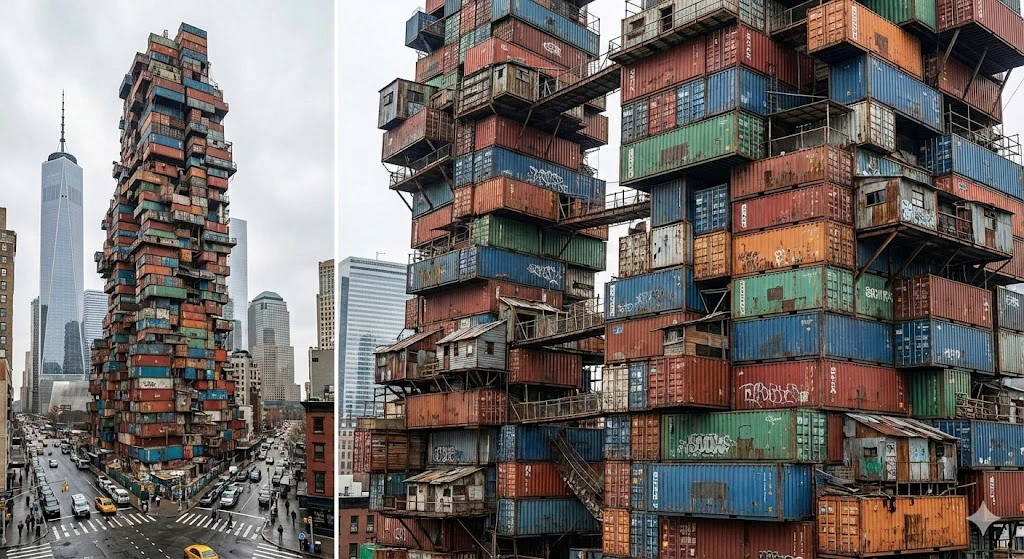

Consider software architecture like building architecture. A prefabricated shed works well for storage: the requirements are simple, the constraints are standard, and the design can be templated. This is your greenfield web app with a clear spec. LLMs can generate something functional.

But imagine iteratively cobbling together a skyscraper with modular pieces and no cohesive plan from the start. You literally end up with Kowloon Walled City: functional, but unmaintainable.

And what about renovating a 100-year-old building? You need to know:

- Which walls are load-bearing

- Where utilities are routed

- What building codes applied when it was built

- How previous renovations affected the structure

- What materials were used and how they’ve aged

The architectural plans—the original, deterministic specifications—are essential. You can’t just send in a contractor who looks at the building for the first time and starts swinging a sledgehammer based on what seems right.

Legacy codebases are exactly like this. They have:

- Poorly documented internal APIs

- Brittle dependencies no one fully understands

- Historical context that doesn’t fit in any context window

- Constraints that aren’t obvious from reading the code

- Business logic that emerged from years of incremental requirements changes and accreted functionality

When you have a complex system with ambiguous internal APIs, where it’s unclear which service talks to what or for what reason, and the documentation is years out of date and too large to fit in an LLM’s context window, this is exactly when LLMs are most likely to confidently do the wrong thing.

The Vendetect story is a microcosm of this problem. The context that mattered—that the recursion was bounded by Git’s structure, that the real issue was a filesystem quirk—wasn’t obvious from looking at the code. Claude filled in the gaps with seemingly reasonable assumptions. Those assumptions were wrong.

The path forward: Formal verification and new frameworks

I’m not arguing against LLM coding assistants. In my extensive use of LLM coding tools, both for code generation and bug finding, I’ve found them genuinely useful. They excel at generating boilerplate code, suggesting approaches, serving as a rubber duck for debugging, and summarizing code. The productivity gains are real.

But we need to be clear-eyed about their fundamental limitations.

Where LLMs work well today

LLMs are most effective when you have:

- Clean, well-documented codebases with idiomatic code

- Greenfield projects

- Excellent test coverage that catches errors immediately

- Tasks where errors are quickly obvious (it crashes, the output is wrong), allowing the LLM to iteratively climb toward the goal

- Pair-programming style review by experienced developers who understand the context

- Clear, unambiguous specifications written by experienced developers

The last two are absolutely necessary for success, but are often not sufficient. In these environments, LLMs can accelerate development. The generated code might not be perfect, but errors are caught quickly and the cost of iteration is low.

What we need to build

If the ultimate goal is to raise the level of abstraction for developers above reviewing code, we will need these frameworks and practices:

Formal verification frameworks for LLM output. We will need tools that can prove semantic preservation—that the LLM’s changes maintain the intended behavior of the code. This is hard, but it’s not impossible. We already have formal methods for certain domains; we need to extend them to cover LLM-generated code.

Better ways to encode context and constraints. LLMs need more than just the code; they need to understand the invariants, the assumptions, the historical context. We need better ways to capture and communicate this.
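One low-tech way to capture such an invariant is to state it in the code itself, both as documentation and as a runtime check. The sketch below is a hypothetical example: `MAX_DEPTH`, the `walk` function, and the nested-dict input are all invented for illustration.

```python
MAX_DEPTH = 16  # hypothetical bound; the real limit depends on the domain


def walk(node, depth=0):
    """Recursively collect leaf names from a nested dict.

    Invariant: inputs never nest deeper than MAX_DEPTH, so recursion here is
    intentional and safe. A rewrite must preserve the left-to-right leaf order.
    """
    if depth > MAX_DEPTH:
        # Fail loudly if the documented assumption is ever violated.
        raise ValueError(f"nesting exceeds documented bound of {MAX_DEPTH}")
    leaves = []
    for name, child in node.items():
        if isinstance(child, dict):
            leaves.extend(walk(child, depth + 1))
        else:
            leaves.append(name)
    return leaves
```

The assumption now travels with the function: a reader, human or LLM, sees why the recursion is bounded, and a violated assumption produces a clear error instead of a mystery.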

Testing frameworks that go beyond “does it crash?” We need to test semantic correctness, not just syntactic validity. Does the code do what it’s supposed to do? Are the security properties maintained? Are the performance characteristics acceptable? Unit tests are not enough.
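Differential testing is one existing technique in this direction: run a trusted implementation and a candidate on the same inputs and report where they disagree. The sketch below is a minimal version under stated assumptions. `reference_increment` stands in for trusted behavior (32-bit wraparound), `candidate_increment` stands in for a generated rewrite, and both are hypothetical.

```python
import random


def reference_increment(n: int) -> int:
    """Stand-in for trusted behavior: 32-bit two's-complement wraparound."""
    return (n + 1 + 2**31) % 2**32 - 2**31


def candidate_increment(n: int) -> int:
    """Stand-in for a generated rewrite that ignores overflow."""
    return n + 1


def find_disagreements(reference, candidate, inputs):
    """Return every input on which the two implementations differ."""
    return [x for x in inputs if reference(x) != candidate(x)]


def sample_inputs(count: int, seed: int = 0):
    """Boundary cases plus seeded random 32-bit values."""
    rng = random.Random(seed)
    edges = [-2**31, -1, 0, 1, 2**31 - 1]
    return edges + [rng.randint(-2**31, 2**31 - 1) for _ in range(count)]


mismatches = find_disagreements(
    reference_increment, candidate_increment, sample_inputs(1000)
)
```

The two functions differ on exactly one input out of roughly four billion, so random sampling alone is very unlikely to find it. The explicit boundary values are what expose the mismatch, which is one reason “it passes tests” is weaker evidence than it sounds.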

Metrics for measuring semantic correctness. “It compiles” isn’t enough. Even “it passes tests” isn’t enough. We need ways to quantify whether the semantics have been preserved.

Composable building blocks that are secure by design. Instead of allowing the LLM to write arbitrary code, we will need the LLM to instead build with modular, composable building blocks that have been verified as secure. A bit like how industrial supplies have been commoditized into Lego-like parts. Need a NEMA 23 square body stepper motor with a D profile shaft? No need to design and build it yourself—you can buy a commercial-off-the-shelf motor from any of a dozen different manufacturers and they will all bolt into your project just as well. Likewise, LLMs shouldn’t be implementing their own authentication flows. They should be orchestrating pre-made authentication modules.
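As a small-scale analogy, here is a sketch of password storage that orchestrates existing, vetted primitives from Python’s standard library instead of inventing its own scheme. The function names and the `ITERATIONS` value are assumptions made for this example, not a recommendation.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 100_000  # illustrative work factor only; tune for your environment


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using PBKDF2-HMAC-SHA256."""
    salt = secrets.token_bytes(16)  # fresh random salt per password
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Recompute the key and compare it without content-dependent timing."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(key, expected_key)
```

Every security-relevant step (salt generation, key derivation, comparison) is delegated to a component someone else has already reviewed. The code that remains is glue, which is a much smaller surface to get wrong.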

The trust model

Until we have these frameworks, we need a clear mental model for LLM output: Treat it like code from a junior developer who’s seeing the codebase for the first time.

That means:

- Always review thoroughly

- Never merge without testing

- Understand that “looks right” doesn’t mean “is right”

- Remember that LLMs are confident even when wrong

- Verify that the solution solves the actual problem, not a plausible-sounding problem

Because an LLM is a probabilistic system, there’s always a chance it will introduce a bug or misinterpret its prompt. (These are really the same thing.) How small does that probability need to be? Ideally, it would be smaller than a human’s error rate. We’re not there yet, not even close.
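A back-of-the-envelope calculation shows why even a small per-change error rate matters at scale. The sketch assumes each change fails independently with the same probability, which is a simplification, and the numbers in the usage note are hypothetical, not measurements.

```python
def prob_at_least_one_bug(per_change_error_rate: float, changes: int) -> float:
    """Chance that at least one of `changes` independent edits introduces a bug."""
    return 1.0 - (1.0 - per_change_error_rate) ** changes
```

Under these assumptions, a 1% chance of error per change compounds to roughly a 63% chance of at least one bug across 100 unreviewed changes (`prob_at_least_one_bug(0.01, 100)`).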

Conclusion: Embracing verification in the age of AI

The fundamental computational limitations on automation haven’t changed since I wrote about them in 2017. What has changed is that we now have tools that make it easier to generate incorrect code confidently and at scale.

When we moved from assembly to C, we didn’t abandon determinism; we built compilers that guaranteed semantic preservation. As we move toward LLM-assisted development, we need similar guarantees. But the solution isn’t to reject LLMs! They offer real productivity gains for certain tasks. We just need to remember that their output is only as trustworthy as code from someone seeing the codebase for the first time. Just as we wouldn’t merge a PR from a new developer without review and testing, we can’t treat LLM output as automatically correct.

If you’re interested in formal verification, automated testing, or building more trustworthy AI systems, get in touch. At Trail of Bits, we’re working on exactly these problems, and we’d love to hear about your experiences with LLM coding tools, both the successes and the failures. Because right now, we’re all learning together what works and what doesn’t. And the more we share those lessons, the better equipped we’ll be to build the verification frameworks we need.