Supply chain attacks are exploiting our assumptions

Every time you run cargo add or pip install, you are taking a leap of faith. You trust that the code you are downloading contains what you expect, comes from who you expect, and does what you expect. These expectations are so fundamental to modern development that we rarely think about them. However, attackers are systematically exploiting each of these assumptions.

In 2024 alone, PyPI and npm removed thousands of malicious packages; multiple high-profile projects had malware injected directly into the build process; and the XZ Utils backdoor nearly made it into millions of Linux systems worldwide.

Dependency scanning only catches known vulnerabilities. It won’t catch when a typosquatted package steals your credentials, when a compromised maintainer publishes malware, or when attackers poison the build pipeline itself. These attacks succeed because they exploit the very trust that makes modern software development possible.

This post breaks down the trust assumptions that make the software supply chain vulnerable, analyzes recent attacks that exploit them, and highlights some of the cutting-edge defenses being built across ecosystems to turn implicit trust into explicit, verifiable guarantees.

Implicit trust

For many developers, the software supply chain begins and ends with the software bill of materials (SBOM) and dependency scanning, which together answer two fundamental questions: what code do you have, and does it contain known vulnerabilities? But understanding what you have is the bare minimum. As sophisticated attacks become more common, you also need to understand where your code comes from and how it gets to you.

You trust that you are installing the package you expect. You assume that running cargo add rustdecimal is safe because rustdecimal is a well-known and widely used library. Or wait, maybe it’s spelled rust_decimal?

You trust that packages are published by the package maintainers. When a popular package starts shipping with a precompiled binary to save build time, you may decide to trust the package author. However, many registries lack strong verification that publishers are who they claim to be.

You trust that packages are built from the package source code. You may work on a security-conscious team that audits code changes in the public repository before upgrading dependencies. But this is meaningless if the distributed package was built from code that does not appear in the repository.

You trust the maintainers themselves. Ultimately, installing third-party code means trusting package maintainers. It is not practical to audit every line of code you depend on. We assume that the maintainers of well-established and widely adopted packages will not suddenly decide to add malicious code.

These assumptions extend beyond traditional package managers. The same trust exists when you run a GitHub action, install a tool with Homebrew, or execute the convenient curl ... | bash installation script. Understanding these implicit trust relationships is the first step in assessing and mitigating supply chain risk.

Recent attacks

Attackers are exploiting trust assumptions across every layer of the supply chain. Recent incidents range from simple typosquatting to multiyear campaigns, demonstrating how attackers’ tactics are evolving and growing more complex.

Deceptive doubles

Typosquatting involves publishing a malicious package with a name similar to that of a legitimate package. Running cargo add rustdecimal instead of rust_decimal could install malware instead of the expected legitimate library. This exact attack occurred on crates.io in 2022. The malicious rustdecimal mimicked the popular rust_decimal package but contained a Decimal::new function that executed a malicious binary when called.

The simplicity of the attack has made it easy for attackers to launch numerous large-scale campaigns, particularly against PyPI and npm. Since 2022, there have been multiple typosquatting campaigns targeting packages that account for a combined 1.2 billion weekly downloads. Thousands of malicious packages have been published to PyPI and npm alone. This type of attack happens so frequently that there are too many examples to list here. In 2023, researchers documented a campaign that registered 900 typosquats of 40 popular PyPI packages and discovered malware being staged on crates.io. The attacks have only intensified, with 500 malicious packages published in a single 2024 campaign.

Dependency confusion takes a different approach, exploiting package manager logic directly. Security researcher Alex Birsan demonstrated and named this type of attack in 2021. He discovered that many organizations use names for internal packages that are either leaked or guessable. By publishing packages with the same names as these internal packages to public registries, Birsan was able to trick package managers into downloading his version instead. Birsan’s proof of concept identified vulnerabilities across three programming languages and 35 organizations, including Shopify, Apple, Netflix, Uber, and Yelp.

In 2022, an attacker used this technique to slip malicious code into the nightly releases of PyTorch. An internal dependency named torchtriton was served from PyTorch’s nightly package index. The attacker published a malicious package with the same name to PyPI, which took precedence. As a result, the nightly versions of PyTorch contained malware for five days before it was caught.
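The precedence problem can be illustrated with a simplified model. The sketch below is not any package manager’s actual resolution algorithm: the index contents, the package name internal-utils, and both resolver functions are hypothetical. It assumes a resolver that merges candidates from every configured index and picks the highest version, then shows how pinning a package to a single index changes the outcome.

```python
from typing import Dict, List, Tuple

Index = Dict[str, List[str]]

# Hypothetical index contents, for illustration only.
INDEXES: Dict[str, Index] = {
    "private": {"internal-utils": ["1.0.0", "1.2.0"]},
    "public": {"internal-utils": ["99.0.0"], "requests": ["2.32.0"]},
}


def parse(version: str) -> Tuple[int, ...]:
    """Turn '1.2.0' into (1, 2, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def naive_resolve(name: str, indexes: Dict[str, Index]) -> Tuple[str, str]:
    """Merge candidates from all indexes and pick the highest version."""
    candidates = [
        (parse(version), version, index_name)
        for index_name, index in indexes.items()
        for version in index.get(name, [])
    ]
    if not candidates:
        raise LookupError(f"no candidates for {name}")
    _, version, index_name = max(candidates)
    return index_name, version


def pinned_resolve(
    name: str, indexes: Dict[str, Index], pins: Dict[str, str]
) -> Tuple[str, str]:
    """Only consult the pinned index for packages that have a pin."""
    if name in pins:
        indexes = {pins[name]: indexes[pins[name]]}
    return naive_resolve(name, indexes)


print(naive_resolve("internal-utils", INDEXES))
print(pinned_resolve("internal-utils", INDEXES, {"internal-utils": "private"}))
```

In this model, the attacker only needs to publish a higher version number to the public index to win. Tying each internal name to the index it is expected to come from removes that lever.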

While these attacks occur at the point of installation, other attacks take a more direct approach by compromising the publishing process itself.

Stolen secrets

Compromised accounts are another frequent attack vector. Attackers acquire a leaked key, stolen token, or guessed password, and are able to directly publish malicious code on behalf of a trusted entity. A few recent incidents show the scale of this type of attack:

- ctrl/tinycolor (September 2025): Self-propagating malware harvested npm API credentials and used the credentials to publish additional malicious packages. Over 40 packages were compromised, accounting for more than 2 million weekly downloads.

- Nx (August 2025): A compromised token allowed attackers to publish malicious versions whose scripts used already installed AI CLI tools (Claude, Gemini, Q) for reconnaissance. The malware stole cryptocurrency wallets, GitHub and npm tokens, and SSH keys from thousands of developers, then exfiltrated the data to public GitHub repositories.

- rand-user-agent (May 2025): A release containing malware was caught only after researchers noticed that new releases had been published even though the source code had not changed in months.

- rspack (December 2024): Stolen npm tokens enabled attackers to publish cryptocurrency miners in packages with 500,000 combined weekly downloads.

- UAParser.js (October 2021): A compromised npm token was used to publish malicious releases containing a cryptocurrency miner. The library had millions of weekly downloads at the time of the attack.

- PHP Git server (March 2021): Stolen credentials allowed attackers to inject a backdoor directly into PHP’s source code. Thankfully, the content of the changes was easily spotted and removed by the PHP team before any release.

- Codecov (January 2021): Attackers found a deployment key in a public Docker image layer and used it to modify Codecov’s Bash Uploader tool, silently exfiltrating environment variables and API keys for months before discovery.

Stolen secrets remain one of the most reliable supply chain attack vectors. But as organizations implement stronger authentication and better secret management, attackers are shifting from stealing keys to compromising the systems that use them.

Poisoned pipelines

Instead of stealing credentials, some attackers have managed to distribute malware through legitimate channels by compromising the build and distribution systems themselves. Code reviews and other security checks are bypassed entirely by directly injecting malicious code into CI/CD pipelines.

The SolarWinds attack in 2020 is one of the best-known attacks in this category. Attackers compromised the build environment and inserted malicious code directly into the Orion software during compilation. The malicious version of Orion was then signed and distributed through SolarWinds’ legitimate update channels. The attack affected thousands of organizations, including multiple Fortune 500 companies and government agencies.

More recently, in late 2024, an attacker compromised the Ultralytics build pipeline to publish multiple malicious versions. The attacker used a template injection in the project’s GitHub Actions to gain access to the CI/CD pipeline and poisoned the GitHub Actions cache to include malicious code directly in the build. At the time of the attack, Ultralytics had more than one million weekly downloads.

In 2025, an attacker modified the reviewdog/actions-setup GitHub action v1 tag to point to a malicious version containing code to dump secrets. This likely led to the compromise of another popular action, tj-actions/changed-files, through its dependency on tj-actions/eslint-changed-files, which in turn relied on the compromised reviewdog action. This cascading compromise affected thousands of projects using the changed-files action.

While poisoned pipeline attacks are relatively rare compared to typosquatting or credential theft, they represent an escalation in attacker sophistication. As stronger defenses are put in place, attackers are forced to move up the supply chain. The most determined attackers are willing to spend years preparing for a single attack.

Malicious maintainers

The XZ Utils backdoor, discovered in March 2024, nearly compromised millions of Linux systems worldwide. The attacker spent over two years making legitimate contributions to the project before gaining maintainer access. They then abused this trust to insert a sophisticated backdoor through a series of seemingly innocent commits that would have granted remote access to any system using the compromised version.

Ultimately, you must trust the maintainers of your dependencies. Secure build pipelines cannot protect against a trusted maintainer who decides to insert malicious code. With open-source maintainers increasingly overwhelmed, and with AI tools making it easier to generate convincing contributions at scale, this trust model is facing unprecedented challenges.

New defenses

As attacks grow more sophisticated, defenders are building tools to match. These new approaches are making trust assumptions explicit and verifiable rather than implicit and exploitable. Each addresses a different layer of the supply chain where attackers have found success.

TypoGard and Typomania

Most package managers now include some form of typosquatting protection, but they typically use traditional similarity checks like those measuring Levenshtein distance, which generate excessive false positives that need to be manually reviewed.

TypoGard fills this gap by using multiple context-aware metrics, like the following, to detect typosquatting packages with a low false positive rate and minimal overhead:

- Repeated characters (e.g., rustdeciimal)

- Common typos based on keyboard layout

- Swapped characters (e.g., reqeusts instead of requests)

- Package popularity thresholds to focus on high-risk targets

This tool targets npm, but the concepts can be extended to other languages. The Rust Foundation published a Rust port, Typomania, that has been adopted by crates.io and has successfully caught multiple malicious packages.
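To make these signals concrete, here is a minimal sketch of two of them (repeated characters and swapped characters) combined with a popularity threshold. It is not TypoGard’s or Typomania’s implementation: the download counts, the threshold, the tinylib package, and all function names are hypothetical, and ignoring "-" and "_" when comparing names is an extra assumption made for this example.

```python
from typing import Optional

# Hypothetical popularity data: package name -> weekly downloads.
WEEKLY_DOWNLOADS = {
    "requests": 50_000_000,
    "rust_decimal": 1_000_000,
    "tinylib": 50,
}
MIN_DOWNLOADS = 100_000  # assumed threshold; only popular names are protected


def strip_delimiters(name: str) -> str:
    """Assumption for this sketch: ignore '-' and '_' when comparing names."""
    return name.replace("-", "").replace("_", "").lower()


def collapse_repeats(name: str) -> str:
    """Collapse runs of one character: 'rustdeciimal' -> 'rustdecimal'."""
    out = []
    for ch in name:
        if not out or out[-1] != ch:
            out.append(ch)
    return "".join(out)


def is_adjacent_swap(a: str, b: str) -> bool:
    """True if a and b differ only by one swap of two neighboring characters."""
    if len(a) != len(b):
        return False
    diffs = [i for i in range(len(a)) if a[i] != b[i]]
    return (
        len(diffs) == 2
        and diffs[1] == diffs[0] + 1
        and a[diffs[0]] == b[diffs[1]]
        and a[diffs[1]] == b[diffs[0]]
    )


def likely_target(candidate: str) -> Optional[str]:
    """Return the popular package a new name appears to imitate, if any."""
    if candidate in WEEKLY_DOWNLOADS:
        return None  # an existing name is not a typosquat of itself
    c = strip_delimiters(candidate)
    for target, downloads in WEEKLY_DOWNLOADS.items():
        if downloads < MIN_DOWNLOADS:
            continue  # popularity threshold: skip low-risk targets
        t = strip_delimiters(target)
        if c == t or collapse_repeats(c) == collapse_repeats(t) or is_adjacent_swap(c, t):
            return target
    return None


for name in ["rustdeciimal", "reqeusts", "tinyllib", "leftpad"]:
    print(name, "->", likely_target(name))
```

The popularity threshold is what keeps the check cheap and quiet: a name that resembles an obscure package is not flagged, which is one way to trade a little coverage for far fewer false positives.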

Zizmor

Zizmor is a static analysis tool for GitHub Actions. Actions have a large surface area, and writing complex workflows can be difficult and error-prone. There are many subtle ways workflows can introduce vulnerabilities.

For example, Ultralytics was compromised via template injection in one of its workflows.

    - name: Commit and Push Changes
      if: (... || github.event_name == 'pull_request_target' || ...
      run: |
        ...
        git pull origin ${{ github.head_ref || github.ref }}
        ...

Workflows triggered by pull_request_target events run with write permissions and access to repository secrets. An attacker opened a pull request from a branch with a malicious name. When the workflow ran, the github.head_ref variable expanded to the malicious branch name, which was then executed as part of the run command with the workflow’s elevated privileges.
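The mechanics of template injection can be modeled in a few lines. This is a sketch, not the Ultralytics workflow or the real payload: the branch name is a harmless hypothetical stand-in and both function names are invented for illustration. The first function models template expansion as plain text substitution. The second models the commonly recommended alternative of passing the value through an environment variable.

```python
from typing import Dict, Tuple

# Hypothetical, harmless stand-in for an attacker-chosen branch name.
MALICIOUS_BRANCH = "$(echo${IFS}injected)"


def render_by_substitution(head_ref: str) -> str:
    # Models ${{ ... }} expansion: the value is pasted into the script text
    # before any shell sees it, so the shell later parses it as code.
    return "git pull origin " + head_ref


def render_with_env(head_ref: str) -> Tuple[str, Dict[str, str]]:
    # Safer pattern: the script text is constant and the value travels in an
    # environment variable, which the shell expands as data.
    return 'git pull origin "$HEAD_REF"', {"HEAD_REF": head_ref}


print(render_by_substitution(MALICIOUS_BRANCH))
script, env = render_with_env(MALICIOUS_BRANCH)
print(script, env)
```

In the first case the command substitution becomes part of the script itself. In the second, the script never changes no matter what the branch is called.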

The reviewdog/actions-setup attack was also carried out in part by changing the action’s v1 tag to point to a malicious commit. Anyone using reviewdog/actions-setup@v1 in their workflows silently started getting a malicious version without making any changes to their own workflows.

Zizmor flags all of the above. It includes a dangerous-triggers rule that flags workflows triggered by pull_request_target, a template-injection rule, and an unpinned-uses check that warns against referencing actions through mutable references (like tags or branch names), as in reviewdog/actions-setup@v1.
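The idea behind a pinning check is simple enough to sketch. The code below is not how Zizmor works internally. It is a rough regex-based approximation, with hypothetical function names and a placeholder commit hash, that flags any uses: reference not pinned to a full 40-character commit SHA.

```python
import re
from typing import List

USES_RE = re.compile(r"^[ \t]*-?[ \t]*uses:[ \t]*([^\s#]+)", re.MULTILINE)
FULL_SHA_RE = re.compile(r"^[0-9a-f]{40}$")


def unpinned_uses(workflow_text: str) -> List[str]:
    """Return action references that use a tag, a branch, or no ref at all."""
    findings = []
    for match in USES_RE.finditer(workflow_text):
        target = match.group(1).strip("'\"")
        if target.startswith("./") or target.startswith("docker://"):
            continue  # local actions and Docker images are out of scope here
        _, separator, ref = target.partition("@")
        if not separator or not FULL_SHA_RE.match(ref):
            findings.append(target)
    return findings


# The 40-character hash below is a placeholder, not a real commit.
WORKFLOW = """\
steps:
  - uses: actions/checkout@0123456789abcdef0123456789abcdef01234567
  - uses: reviewdog/actions-setup@v1
  - name: Local build
    uses: ./.github/actions/build
"""

print(unpinned_uses(WORKFLOW))
```

A real analyzer parses the YAML and understands far more context, but the core signal is the same: a tag such as v1 can be moved by whoever controls the repository, while a commit SHA cannot.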

PyPI Trusted Publishing and attestations

PyPI has taken significant steps to address several implicit trust assumptions through two complementary features: Trusted Publishing and attestations.

Trail of Bits worked with PyPI on Trusted Publishing [1], which eliminates the need for long-lived API tokens. Instead of storing secrets that can be stolen, developers configure a trust relationship once: “this GitHub repository and workflow can publish this package.” When the workflow runs, GitHub sends a short-lived OIDC token to PyPI with claims about the repository and workflow. PyPI verifies that this token was signed by GitHub’s key and responds with a short-lived PyPI token, which the workflow can use to publish the package. Using automatically generated, minimally scoped, short-lived tokens vastly reduces the risk of compromise.
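The claim-matching step of this exchange can be sketched as follows. This is an illustrative model, not PyPI’s implementation: it assumes the token’s signature has already been verified, the TrustedPublisher type and the example repository are hypothetical, and the policy shown (issuer, expiry, repository, workflow path) is a simplification. The claim names iss, exp, repository, and job_workflow_ref are ones GitHub includes in its Actions OIDC tokens.

```python
from dataclasses import dataclass
from typing import Any, Dict

GITHUB_ISSUER = "https://token.actions.githubusercontent.com"


@dataclass(frozen=True)
class TrustedPublisher:
    """Hypothetical record of 'this repository and workflow may publish'."""

    repository: str      # e.g. "example-org/example-pkg"
    workflow_path: str   # e.g. ".github/workflows/release.yml"


def claims_match(publisher: TrustedPublisher, claims: Dict[str, Any], now: int) -> bool:
    """Check already-verified token claims against the configured publisher."""
    if claims.get("iss") != GITHUB_ISSUER:
        return False
    if claims.get("exp", 0) <= now:
        return False  # short-lived token has expired
    if claims.get("repository") != publisher.repository:
        return False
    # job_workflow_ref looks like "<owner>/<repo>/<workflow path>@<git ref>".
    expected_prefix = f"{publisher.repository}/{publisher.workflow_path}@"
    return str(claims.get("job_workflow_ref", "")).startswith(expected_prefix)


publisher = TrustedPublisher("example-org/example-pkg", ".github/workflows/release.yml")
claims = {
    "iss": GITHUB_ISSUER,
    "exp": 1_600,
    "repository": "example-org/example-pkg",
    "job_workflow_ref": "example-org/example-pkg/.github/workflows/release.yml@refs/tags/v1.0.0",
}
print(claims_match(publisher, claims, now=1_000))
```

Because nothing long-lived is stored in the repository, there is no secret to leak: a token is only useful for the few minutes it is valid and only for the package it was issued for.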

Without long-lived and over-privileged API tokens, attackers must instead compromise the publishing GitHub workflow itself. While the Ultralytics attack demonstrated that CI/CD pipeline compromise is still a real threat, eliminating the need for users to manually manage credentials removes a source of user error and further reduces the attack surface.

Building on this foundation, Trail of Bits worked with PyPI again to introduce index-hosted digital attestations in late 2024 through PEP 740. Attestations cryptographically bind each published package to its build provenance using Sigstore. Packages using the PyPI publish GitHub action automatically include attestations, which act as a verifiable record of exactly where, when, and how the package was built.
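One part of that binding is easy to show: an attestation statement names the artifact and records its digest, so any change to the file breaks the match. The sketch below covers only that digest comparison, under the assumption that the statement follows the in-toto layout with a subject list of name and sha256 digest pairs. It does not verify the Sigstore signature or the signer’s identity, which a real verifier must do first, and the file name and contents are made up.

```python
import hashlib
from typing import Any, Dict


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def subject_matches(statement: Dict[str, Any], filename: str, artifact: bytes) -> bool:
    """Check that the statement names this file with this exact SHA-256 digest."""
    digest = sha256_hex(artifact)
    for subject in statement.get("subject", []):
        if subject.get("name") == filename and subject.get("digest", {}).get("sha256") == digest:
            return True
    return False


# Hypothetical artifact and statement, for illustration only.
wheel_name = "example_pkg-1.0.0-py3-none-any.whl"
wheel_bytes = b"example wheel contents"
statement = {"subject": [{"name": wheel_name, "digest": {"sha256": sha256_hex(wheel_bytes)}}]}

print(subject_matches(statement, wheel_name, wheel_bytes))
print(subject_matches(statement, wheel_name, wheel_bytes + b" tampered"))
```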

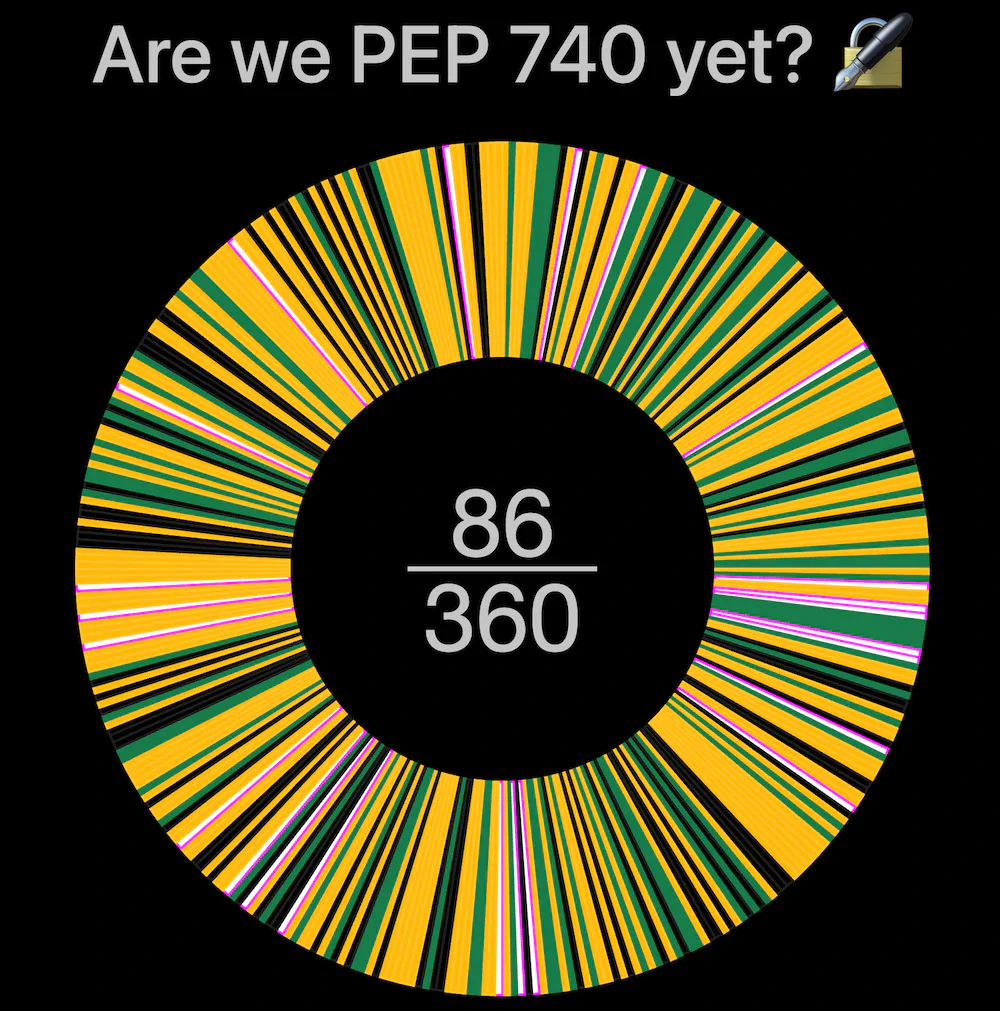

Over 30,000 packages use Trusted Publishing, and “Are We PEP 740 Yet?” tracks attestation adoption among the most popular packages (86 of the top 360 at the time of writing). The final piece, automatic client-side verification, remains a work in progress. Client tools like pip and uv do not yet verify attestations automatically. Until they do, attestations provide transparency and auditability but not active protection during package installation.

Homebrew build provenance

The implicit trust assumptions extend beyond programming languages and libraries. When you run brew install to install a binary package (a bottle, in Homebrew’s terms), you are trusting that the bottle you’re downloading was built by Homebrew’s official CI from the expected source code and that it was not uploaded by an attacker who found a way to compromise Homebrew’s bottle hosting or otherwise tamper with the bottle’s content.

Trail of Bits, in collaboration with Alpha-Omega and OpenSSF, helped to add build provenance to Homebrew using GitHub’s attestations. Every bottle built by Homebrew now comes with cryptographic proof linking it to the specific GitHub Actions workflow that created it. This makes it significantly harder for a compromised maintainer to silently replace bottles with malicious versions.

    % brew verify --help
    Usage: brew verify [options] formula [...]

    Verify the build provenance of bottles using GitHub's attestation tools.
    This is done by first fetching the given bottles and then verifying their provenance.

Each attestation includes the Git commit, the workflow that ran, and other build-time metadata. This transforms the trust assumption (“I trust this bottle was built from the source I expect”) into a verifiable fact.

The implementation of attestations handled historical bottles through a “backfilling” process, creating attestations for packages built before the system was in place. As a result, all official Homebrew packages include attestations.

The brew verify command makes it straightforward to check provenance, though the feature is still in beta and verification isn’t automatic by default. There are plans to eventually extend this feature to third-party repositories, bringing the same security guarantees to the broader Homebrew ecosystem.

Go Capslock

Capslock is a tool that statically identifies the capabilities of a Go program, including the following:

- Filesystem operations (reading, writing, deleting files)

- Network connections (outbound requests, listening on ports)

- Process execution (spawning subprocesses)

- Environment variable access

- System call usage

    % capslock --packages github.com/fatih/color
    Capslock is an experimental tool for static analysis of Go packages.
    Share feedback and file bugs at https://github.com/google/capslock.
    For additional debugging signals, use verbose mode with -output=verbose
    To get machine-readable full analysis output, use -output=json

    Analyzed packages:
      github.com/fatih/color v1.18.0
      github.com/mattn/go-colorable v0.1.13
      github.com/mattn/go-isatty v0.0.20
      golang.org/x/sys v0.25.0

    CAPABILITY_FILES: 1 references
    CAPABILITY_READ_SYSTEM_STATE: 41 references
    CAPABILITY_SYSTEM_CALLS: 1 references

This approach represents a shift in supply chain security. Rather than focusing on who wrote the code or where it came from, capability analysis examines what the code can actually do. A JSON parsing library that unexpectedly gains network access raises immediate red flags, regardless of whether the change came from a compromised supply chain or directly from a maintainer.

In practice, static capability detection can be difficult. Language features like runtime reflection and unsafe operations make it impossible to statically detect capabilities entirely accurately. Despite the limitations, capability detection provides a critical safety net as part of a layered defense against supply chain attacks.

Capslock pioneered this approach for Go, and the concept is ripe for adoption across other languages. As supply chain attacks grow more sophisticated, capability analysis offers a promising path forward. Verify what code can do, not just where it comes from.
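Watching for newly introduced capabilities amounts to a set difference between two analysis results. The sketch below assumes the capability counts for each version have already been extracted into dictionaries. It does not parse Capslock’s real output format, the function name is hypothetical, and the "after" data (including the CAPABILITY_NETWORK entry) is invented for illustration.

```python
from typing import Dict, List


def new_capabilities(before: Dict[str, int], after: Dict[str, int]) -> List[str]:
    """Return capabilities present in the new version but not in the old one."""
    return sorted(set(after) - set(before))


# Counts for the current version, as in the example output above.
current = {
    "CAPABILITY_FILES": 1,
    "CAPABILITY_READ_SYSTEM_STATE": 41,
    "CAPABILITY_SYSTEM_CALLS": 1,
}
# Hypothetical counts for a proposed upgrade.
proposed = {**current, "CAPABILITY_NETWORK": 3}

added = new_capabilities(current, proposed)
if added:
    print("Review before upgrading, new capabilities:", ", ".join(added))
```

Running a comparison like this in CI on every dependency upgrade turns a surprising new capability into a review item instead of a silent change.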

Where we go from here

Supply chain attacks are not slowing down. If anything, they are becoming more automated, more complex, and more sophisticated in order to target broader audiences. Typosquatting campaigns are targeting packages with billions of downloads, publisher tokens and CI/CD pipelines are being compromised to poison software at the source, and patient attackers are spending years building reputation before striking.

The implicit trust that enabled software ecosystems to scale is being weaponized against us. Understanding your trust assumptions is the first step. Ask yourself these questions:

- Does my ecosystem block typosquatting packages?

- How does it protect against compromised publisher tokens?

- Can I verify build provenance?

- Do I know what capabilities my dependencies have?

Some ecosystems have started building defenses. Know what tools are available and start using them today. Use Trusted Publishing when publishing to PyPI or to crates.io. Check your GitHub Actions with Zizmor. Use It-Depends and Deptective to understand what your software actually depends on. Verify attestations where feasible. Use Capslock to see the capabilities of Go packages, and more importantly, be aware when new capabilities are introduced.

But no ecosystem is completely covered. Push for better defaults where tools are lacking. Every verified attestation, every package caught typosquatting, and every flagged vulnerable GitHub action makes the entire industry more resilient. We cannot completely eliminate trust from supply chains, but we can strive to make that trust explicit, verifiable, and revocable.

If you need help understanding your supply chain trust assumptions, contact us.

[1] The crates.io team released Trusted Publishing for Rust crates in July.