Trail of Bits' Buttercup wins 2nd place in AIxCC Challenge

On August 8, 2025, it was announced that our team took home the runner-up prize of $3M in DARPA’s Artificial Intelligence Cyber Challenge (AIxCC) at DEF CON 33 in Las Vegas. Team Atlanta, a hybrid team of engineers from Georgia Tech, KAIST, POSTECH, and Samsung Research, won the top prize of $4M, and Theori took third place and $1.5M.

AIxCC was a two-year public competition to see who could build the best fully automated system for securing open-source software. Its scoring algorithm rewarded teams for finding vulnerabilities, proving that those vulnerabilities existed, and correctly patching them, with additional credit for speed and accuracy. Human intervention was strictly prohibited.

In last year’s semifinals, the field of 42 competitors was whittled down to 7 finalists. Each finalist received $2M to spend the next year refining its cyber reasoning system (CRS) for this year’s finals. The final round comprised 48 challenges across 23 open-source repositories. Across those challenges, we found 28 vulnerabilities and successfully applied 19 patches.

Yet the real victory goes beyond these numbers. These systems, which collectively took thousands of hours of research and engineering to create, are open-source and available to everyone. Here is what we know so far about how we performed.

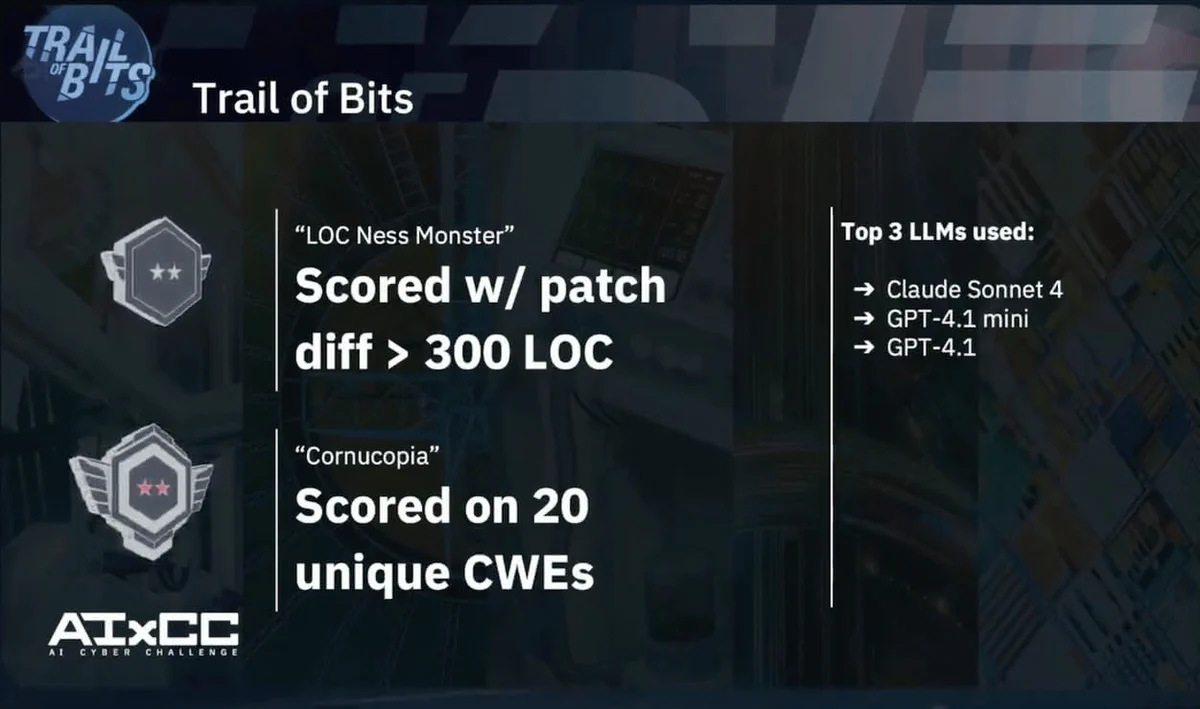

Buttercup found vulnerabilities across 20 CWEs with 90% accuracy

AIxCC challenged competitors to find software vulnerabilities across MITRE’s Top 25 Most Dangerous CWEs, and Buttercup submitted proofs of vulnerabilities (PoVs) across 20 of them. Securing real-world software takes more than uncovering memory leaks and buffer overflows, and this breadth demonstrates our system’s robust understanding of diverse vulnerability classes, from memory safety issues to injection flaws.

Other teams also had good CWE coverage, but what separated us from the competition was our ability to bundle discovered bugs with PoVs and correct patches with a high degree of accuracy. Teams were penalized for incorrect patches, and although data from the competition hasn’t been released, we believe that this was a determining factor in our second-place finish.

LLMs are money well spent

Each AIxCC team was given an LLM budget and a compute budget. The top two teams, Team Atlanta and us, spent the most on LLM queries. Third-place Theori spent roughly half as much on LLM queries as the top two teams.

Buttercup also made efficient use of its budget: at $181 per point, its cost per point was lower than every other finalist’s except Theori’s. This efficiency makes our approach particularly valuable for the open-source community, where compute budgets are limited and cost-effectiveness is crucial for widespread adoption. Here’s how spending compared across all seven finalists.

| Team | LLM spend | Compute spend | Total spend | Cost per point |
|---|---|---|---|---|
| Team Atlanta | $29.4k | $73.9k | $103.3k | $263 |
| Trail of Bits | $21.1k | $18.5k | $39.6k | $181 |
| Theori | $11.5k | $20.3k | $31.8k | $151 |
| fuzzing_brain | $12.2k | $63.2k | $75.4k | $490 |
| Shellphish | $2.9k | $54.9k | $57.8k | $425 |
| 42-b3yond-6ug | $1.1k | $38.7k | $39.8k | $379 |
| LACROSSE | $631 | $7.1k | $7.2k | $751 |

Cost per point is each team’s total spend on compute and LLM resources divided by the number of competition points it earned. Trail of Bits achieved remarkable efficiency at just $181 per point, demonstrating that world-class automated vulnerability discovery doesn’t require massive infrastructure investments.
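To make the arithmetic concrete, the short Python sketch below copies the figures from the table and inverts the cost-per-point formula to estimate how many points each team earned. Point totals are not listed in this post, so treat these estimates as approximations derived from rounded dollar amounts. The helper names (`implied_points`, `llm_share`) are our own, for illustration only. The sketch also computes each team’s LLM share of total spend; by that measure, Trail of Bits put a larger share of its budget into LLM queries (about 53%) than any other finalist.

```python
# Figures copied from the spending table above: spend values are in
# thousands of US dollars; cost per point is in US dollars.
SPEND = {
    # team: (llm_k, compute_k, total_k, cost_per_point)
    "Team Atlanta": (29.4, 73.9, 103.3, 263),
    "Trail of Bits": (21.1, 18.5, 39.6, 181),
    "Theori": (11.5, 20.3, 31.8, 151),
    "fuzzing_brain": (12.2, 63.2, 75.4, 490),
    "Shellphish": (2.9, 54.9, 57.8, 425),
    "42-b3yond-6ug": (1.1, 38.7, 39.8, 379),
    "LACROSSE": (0.631, 7.1, 7.2, 751),
}


def implied_points(total_k: float, cost_per_point: float) -> float:
    """Invert cost_per_point = total_spend / points to estimate points.

    Approximate only: the table's dollar figures are rounded.
    """
    return total_k * 1000 / cost_per_point


def llm_share(llm_k: float, total_k: float) -> float:
    """Fraction of a team's total spend that went to LLM queries."""
    return llm_k / total_k


if __name__ == "__main__":
    # Rank teams from cheapest to most expensive per point.
    ranked = sorted(SPEND.items(), key=lambda item: item[1][3])
    for team, (llm_k, _compute_k, total_k, cpp) in ranked:
        print(
            f"{team:15} ${cpp:>4}/pt  "
            f"~{implied_points(total_k, cpp):5.0f} pts  "
            f"LLM share {llm_share(llm_k, total_k):.0%}"
        )
```

Running the script lists the teams in order of cost per point, from Theori ($151) to LACROSSE ($751).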

Other notable achievements

Our patching system represents a breakthrough in automated code repair. One of our proudest moments was learning that Buttercup submitted the largest software patch, over 300 lines of code, in the entire competition. This shows an understanding of complex codebases and the ability to implement substantial fixes safely and accurately.

Digging more into the results after the awards ceremony, we learned that Buttercup also:

- Scored less than 5 minutes into a task

- Made over 100,000 LLM requests

- Had greater than 90% accuracy

- Found a PoV that triggered a vulnerability the organizers had not deliberately inserted into the challenge

- Scored with a patch that was a one-line change

- Successfully bundled SARIF reports, PoVs, and patches

What Buttercup can do for you

As a cybersecurity services company with a reputation for government and open-source community engagement, Trail of Bits designed Buttercup with accessibility in mind. Buttercup is production-ready, and it shows that world-class automated vulnerability discovery and patching don’t require complex infrastructure. You can download Buttercup today and run it on your laptop.

So how does Buttercup work? It augments both libFuzzer (for C/C++) and Jazzer (for Java) with LLM-generated test cases. It integrates static analysis tools like tree-sitter and code query systems. It uses a multi-agent architecture with separation of concerns for intelligent patching. And it understands call graphs, dependencies, and vulnerability contexts.
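As an illustration of the first point, here is a minimal Python sketch of one common way to feed externally generated test cases to a coverage-guided fuzzer: write them into a corpus directory that the fuzzer loads at startup. This is our assumption for illustration, not a description of Buttercup’s actual integration. `generate_seed_inputs` is a hypothetical stand-in for an LLM call and returns fixed bytes so the sketch runs offline; `add_to_corpus` is likewise a hypothetical helper.

```python
import hashlib
from pathlib import Path
from typing import Iterable


def generate_seed_inputs() -> list[bytes]:
    """Hypothetical stand-in for an LLM call that proposes test inputs.

    A real system would prompt a model with the harness source and
    surrounding code; fixed examples keep this sketch runnable offline.
    """
    return [
        b"GET / HTTP/1.1\r\nHost: example\r\n\r\n",
        b"A" * 4096,
        b"\x00\xff" * 16,
    ]


def add_to_corpus(corpus_dir: Path, inputs: Iterable[bytes]) -> list[Path]:
    """Write each input to corpus_dir, named by the SHA-1 of its contents.

    Content-addressed names (the scheme libFuzzer uses for inputs it saves)
    collapse duplicate inputs into a single file. Returns only the paths
    that were newly written.
    """
    corpus_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for data in inputs:
        path = corpus_dir / hashlib.sha1(data).hexdigest()
        if not path.exists():
            path.write_bytes(data)
            written.append(path)
    return written
```

A libFuzzer target built with `-fsanitize=fuzzer` can then be started with the directory as a positional argument, for example `./fuzz_target corpus/`, and it loads every file in that directory as an initial input before it starts mutating.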

Buttercup’s story has only just begun. We’re already exploring ways to optimize the system further, and DARPA and ARPA-H have generously offered each AIxCC team an additional $200,000 to integrate their CRSs into critical software. If you have a code repository that you want to secure with Buttercup, we’d like to hear from you.

DARPA hasn’t yet released all of the AIxCC competition data and telemetry to the competitors, so stay tuned for more blog posts analyzing the results over the coming weeks.

Finally, congratulations to all the teams for challenging us to push the envelope for what can be achieved with AI systems in open-source security. The future of the industry begins today.

For more background, see our previous posts on the AIxCC:

- Buttercup is now open-source!

- AIxCC finals: Tale of the tape

- Buckle up Buttercup: AIxCC’s scored round is underway

- Kicking off AIxCC’s Finals with Buttercup

- Trail of Bits Advances to AIxCC Finals

- Trail of Bits’ Buttercup heads to DARPA’s AIxCC

- DARPA awards $1 million to Trail of Bits for AI Cyber Challenge

- Our thoughts on AIxCC’s competition format

- DARPA’s AI Cyber Challenge: We’re In!

For coverage of the competition in the media:

- DARPA - AI Cyber Challenge marks pivotal inflection point for cyber defense

- ARPA-H - AI Cyber Challenge showcases AI’s Power to secure America’s hospitals and protect patient data

- Axios - Inside the U.S. competition to create AI security tools

- Bloomberg - DARPA’s AI Cyber Contest Awards Security Teams for Fixing Flaws

- OpenSSF - OpenSSF at Black Hat USA 2025 & DEF CON 33: AIxCC Highlights, Big Wins, and the Future of Securing Open Source

- Help Net Security - Buttercup: Open-source AI-driven system detects and patches vulnerabilities

- Cybersecurity Dive - DARPA touts value of AI-powered vulnerability detection as it announces competition winners

- Cyberscoop - DARPA’s AI Cyber Challenge reveals winning models for automated vulnerability discovery and patching

- The Record - DARPA announces $4 million winner of AI code review competition at DEF CON

- Next Gov - DARPA unveils winners of AI challenge to boost critical infrastructure cybersecurity

- Infosecurity Magazine - #DEFCON: AI Cyber Challenge Winners Revealed in DARPA’s $4M Cybersecurity Showdown

- Healthcare IT News - Now available: AI that finds and provides autonomous patching at scale

- Air & Space Forces - Pentagon Contest Develops AI Tools to Find and Patch Dangerous IT Flaws

- MeriTalk - DARPA Announces Winners of AI Cyber Challenge

- Federal News Network - DARPA eyes transition of AI Cyber Challenge tech to ‘widespread use’